Scientists set safety rules for potential weaponization of AI-designed proteins

Scientists establish safety guidelines to prevent potential weaponization of AI-designed proteins. Biosecurity measures address risks and ethical concerns.

Can proteins designed by artificial intelligence become bioweapons? To address this concern and head off strict government regulation, researchers have launched an initiative calling for the responsible and ethical use of protein design. David Baker, a computational biophysicist at the University of Washington, emphasizes that the current benefits of AI-driven protein design outweigh the potential risks. Many other scientists who use AI in biological design have signed on to the initiative's commitments.

Mark Dybul, a global-health policy expert at Georgetown University, welcomes the initiative: "It's a good start. I'll be signing it." However, he stresses that government oversight and regulation are also needed, rather than relying solely on voluntary guidance. The initiative follows reports from the US Congress, think tanks and other sources examining concerns that AI tools such as AlphaFold, and large language models such as ChatGPT, might make it easier to develop biological weapons, such as new toxins or highly transmissible viruses.

Risk assessment

Governments are grappling with the biosecurity risks posed by AI. In October 2023, US President Joe Biden issued an executive order calling for an evaluation of these risks and raising the possibility of requiring DNA-synthesis screening for federally funded research.

Despite these concerns, Baker is wary of government regulation of the field, fearing it could hinder the development of drugs, vaccines and materials made possible by AI-designed proteins. Diggans is unsure how protein-design tools could be regulated, because they are developing so quickly that rules written today might soon be out of date.

By contrast, microbiologist David Relman at Stanford University believes that scientist-led initiatives are not enough to ensure that AI is used safely. He argues that natural scientists alone cannot adequately represent the broader public interest.

Designer-protein dangers

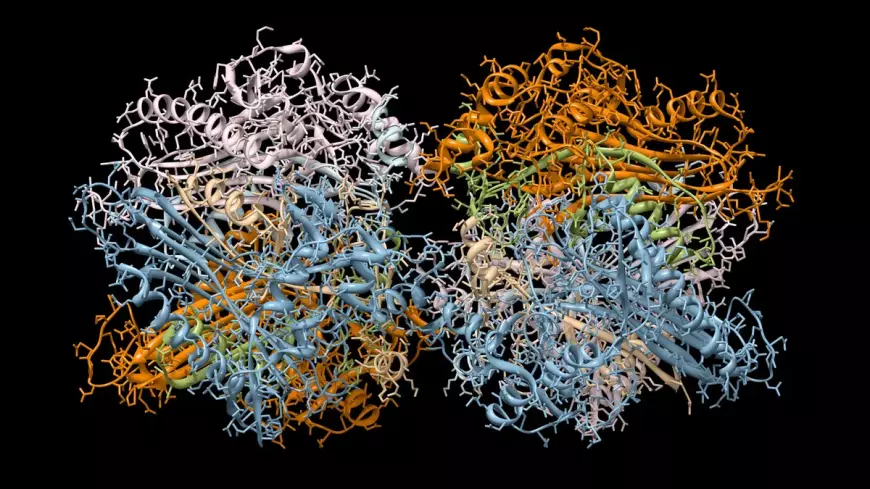

Researchers, including Baker and his team, have been designing and creating new proteins for many years. However, recent advances in AI have greatly expanded what they can do. Tasks that were once time-consuming or considered impossible, such as creating a protein that binds to a specific molecule, can now be done in minutes. Most of the AI tools that scientists have developed for this purpose are readily accessible. In October 2023, the Institute for Protein Design at the University of Washington, which Baker leads, organized an AI safety summit to assess how designer proteins might be misused. Baker says, "The question was: how, if in any way, should protein design be regulated and what, if any, are the dangers?"

The initiative, launched by Baker and many other scientists across the United States, Europe and Asia, encourages self-regulation within the biodesign community. This includes regularly assessing the capabilities of AI tools and monitoring research practices. Baker would like to see a committee of experts in his field review software before it is widely released and recommend 'guardrails' where necessary.

The initiative also calls for better screening of DNA synthesis, a key step in turning AI-designed proteins into physical molecules. Currently, many DNA-synthesis providers that belong to the International Gene Synthesis Consortium (IGSC) screen orders to identify sequences associated with potentially harmful agents, such as toxins or pathogens.