Advanced Voice Mode in ChatGPT impresses testers with sound effects and realistic breathing

Early testers of ChatGPT's Advanced Voice Mode report impressive sound effects and lifelike breathing.

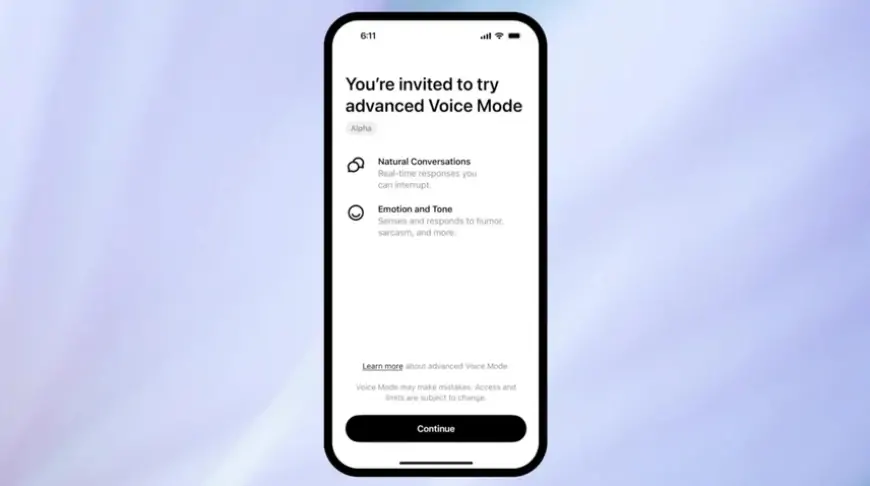

On Tuesday, OpenAI started releasing an alpha version of its new Advanced Voice Mode to a select group of ChatGPT Plus subscribers. Initially previewed in May alongside the GPT-4o launch, the feature is designed to make AI conversations more natural and interactive. In May, it drew criticism for its simulated emotional expressiveness and sparked a public controversy with actress Scarlett Johansson over claims of voice replication. Even so, early tests shared on social media have been mostly positive.

Users with early access report that Advanced Voice Mode enables real-time conversations with ChatGPT: they can interrupt it mid-sentence, and it responds almost instantly. It also detects and reacts to users' emotional cues through vocal tone and delivery, and it can add sound effects while narrating stories.

However, what has surprised many people is the way the voices simulate taking a breath while speaking.

"ChatGPT Advanced Voice Mode counting as fast as it can to 10, then to 50 (this blew my mind—it stopped to catch its breath like a human would)," wrote tech writer Cristiano Giardina on X.

Advanced Voice Mode simulates audible pauses for breath because it was trained on audio samples of humans speaking that included such pauses. The model has learned to mimic inhalations at seemingly appropriate times after being exposed to hundreds of thousands, if not millions, of examples of human speech. Large language models (LLMs) like GPT-4o are master imitators, and this skill has now extended to the audio domain.

Giardina shared more impressions about Advanced Voice Mode on X, noting its capabilities with accents and sound effects.

"It’s very fast, there’s virtually no latency from when you stop speaking to when it responds," he wrote. "When you ask it to make noises, it always has the voice ‘perform’ the noises (with funny results). It can do accents, but when speaking other languages, it always has an American accent. (In the video, ChatGPT is acting as a soccer match commentator.)"

Regarding sound effects, X user Kesku, a moderator of OpenAI's Discord server, shared an example of ChatGPT playing multiple parts with different voices. Kesku also shared another example where a voice recounted an audiobook-sounding sci-fi story from the prompt, "Tell me an exciting action story with sci-fi elements and create atmosphere by making appropriate noises of the things happening using onomatopoeia."

Safety measures in Advanced Voice Mode: OpenAI's precautions and challenges

An OpenAI spokesperson told Ars Technica that the company worked with more than 100 external testers on the Advanced Voice Mode release. Together, the testers represent 45 different languages and 29 geographical areas. The system is designed to prevent impersonation of individuals or public figures by restricting outputs to OpenAI's four chosen preset voices.

OpenAI has also implemented filters to detect and block requests for generating music or other copyrighted audio, a problem that has plagued other AI companies. However, Giardina noted instances of audio "leakage" where unintentional music appeared in some outputs. This suggests that OpenAI trained the Advanced Voice Mode model on a diverse range of audio sources, possibly including licensed material and audio scraped from online video platforms.

Availability: Expanded access for ChatGPT Plus users coming soon

OpenAI plans to extend access to more ChatGPT Plus users in the coming weeks, with a full launch for all Plus subscribers anticipated this fall. According to a company spokesperson, users in the alpha test group will receive a notice in the ChatGPT app and an email with usage instructions.

Since the initial GPT-4o voice preview in May, OpenAI claims to have improved the model's capacity to support millions of simultaneous, real-time voice conversations while maintaining low latency and high quality. This preparation is meant to handle the anticipated demand, which will require significant back-end computation.